What is Kubernetes?

Originally developed by Google and now maintained by the Cloud Native Computing Foundation (CNCF), Kubernetes, often abbreviated as K8s, has become the go-to open-source platform for automating the deployment, scaling, and management of containerized applications. Its rise in popularity reflects the growing trend towards microservices and the need for systems that can handle increasingly complex application architectures.

The Birth of Kubernetes: Solving the Complexity of Modern Infrastructure

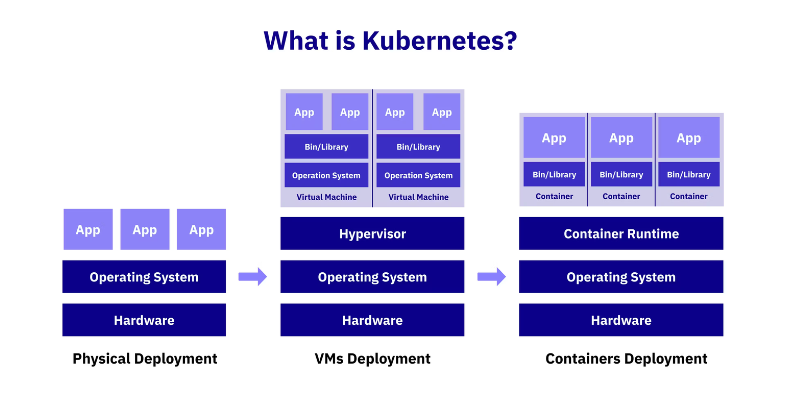

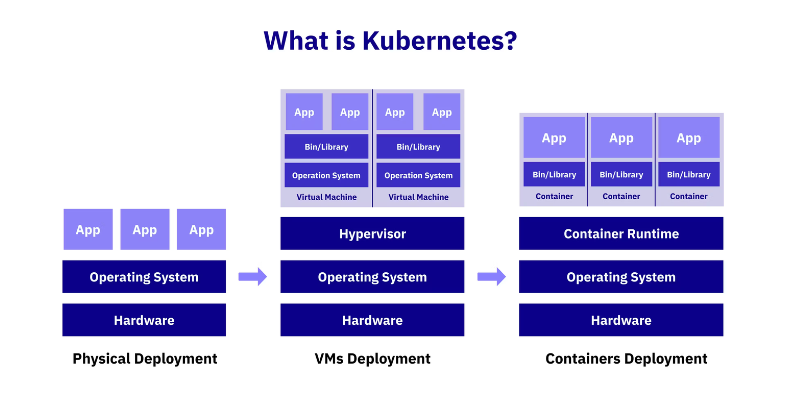

Before the advent of Kubernetes and container orchestration, infrastructure deployment followed a much more manual and resource-intensive process. Traditional deployment methods were based on monolithic architectures running on virtual machines (VMs) or even bare-metal servers. In these setups, entire applications were bundled together and deployed as a single unit on dedicated servers. This had several limitations and complexities:

- Resource Allocation and Scaling: Applications running on VMs were not resource-efficient. If a single service in the monolithic application needed more resources (e.g., more memory or CPU), the entire application would have to be scaled, leading to over-provisioning and wasted resources. Scaling was often rigid and required significant manual intervention.

- Environment Inconsistencies: When moving applications between development, testing, and production environments, differences in the operating system, libraries, or configurations could cause unexpected failures. "It works on my machine" became a common phrase due to these environmental inconsistencies.

- Dependency Management: In monolithic systems, different components of the application might require different versions of the same library or framework, leading to dependency conflicts. Maintaining a consistent environment across development, staging, and production was challenging, and updates to one part of the application often required updates to the entire system.

- Manual Configuration and Deployment: The deployment process was mostly manual, involving numerous steps such as configuring servers, managing network connections, setting up databases, and installing software dependencies. This process was error-prone, time-consuming, and required specialized operations teams to handle deployment tasks. Moreover, updates and rollbacks were risky and required significant downtime.

- Load Balancing and Fault Tolerance: Ensuring that applications could handle traffic spikes or hardware failures required manual setup of load balancers, replication across servers, and failover mechanisms. Achieving high availability often required significant infrastructure investment and complex configurations.

- Server Utilization: With traditional infrastructure, applications were often tightly coupled to the underlying hardware. This meant that each server could only host a few applications, leaving a large amount of server capacity underutilized. Scaling applications often meant adding more physical servers, increasing infrastructure costs.

- Vendor Lock-In: Many organizations depended on specific cloud providers or hardware vendors for their infrastructure. This lack of flexibility made it difficult to shift between different environments, hindering the adoption of hybrid or multi-cloud strategies.

The adoption of container technologies such as Docker changed the deployment process by providing isolated environments for running applications, regardless of the underlying infrastructure. Containers allow developers to package an application with all of its dependencies into a single, portable unit that runs consistently across development, staging, and production environments.

However, while containers made the deployment process simpler and more portable, managing containers at scale introduced a new set of challenges. For instance:

- How do you manage thousands of containers across hundreds of servers?

- How do you ensure that containers are automatically rescheduled if they fail?

- How do you handle networking between containers or scale them up and down based on traffic demands?

This is where Kubernetes stepped in, solving these challenges by automating the orchestration and management of containers.
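The idea behind this automation is a control loop: you declare a desired state, and the system keeps comparing it with what is actually running and corrects any drift. The following is a minimal sketch of that idea in plain Python. `ToyCluster`, `start_container`, `stop_container`, and `reconcile` are hypothetical names invented for illustration; they are not part of any Kubernetes API, and the real controllers are considerably more involved.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class ToyCluster:
    """Hypothetical in-memory stand-in for a cluster (not a Kubernetes API)."""
    desired_replicas: int
    running: set[str] = field(default_factory=set)
    _ids: count = field(default_factory=count, repr=False)

    def start_container(self) -> str:
        # Give each new container a unique, increasing name.
        name = f"web-{next(self._ids)}"
        self.running.add(name)
        return name

    def stop_container(self, name: str) -> None:
        self.running.discard(name)


def reconcile(cluster: ToyCluster) -> None:
    """Move the observed state toward the declared desired state."""
    diff = cluster.desired_replicas - len(cluster.running)
    if diff > 0:
        # Too few containers: start the missing ones.
        for _ in range(diff):
            cluster.start_container()
    elif diff < 0:
        # Too many containers: stop the surplus.
        for name in sorted(cluster.running)[:-diff]:
            cluster.stop_container(name)


cluster = ToyCluster(desired_replicas=3)
reconcile(cluster)                # starts three containers
cluster.stop_container("web-1")   # simulate a crash
reconcile(cluster)                # a replacement is started
print(sorted(cluster.running))
```

Running the loop again after the simulated crash restores the replica count without anyone issuing a manual restart, which is the behavior the questions above are asking for.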

What is Kubernetes Architecture?

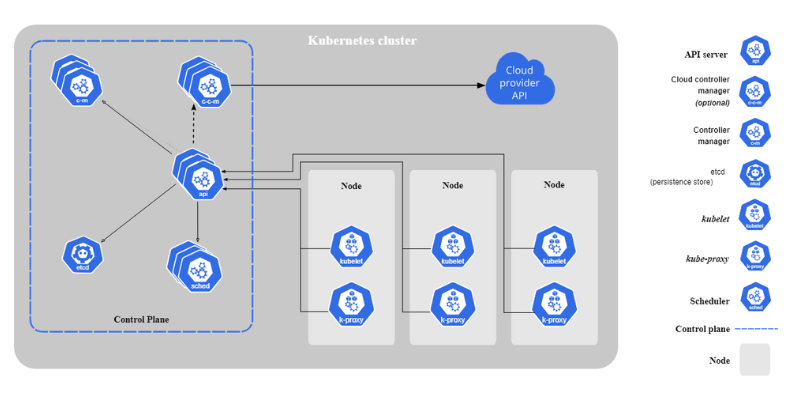

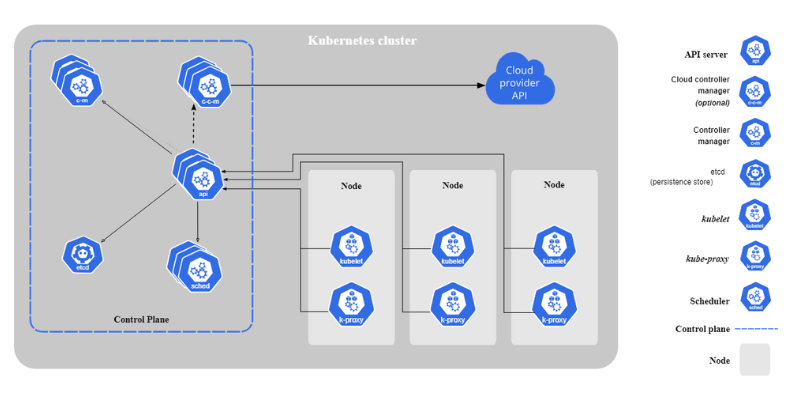

At the core of Kubernetes lies a modular architecture split into a control plane and worker nodes (older documentation describes this as a master-worker structure). The control plane manages the overall cluster, and worker nodes run the containerized applications. Below are key components of the Kubernetes architecture:

- Kube-apiserver: The front end of the Kubernetes control plane. It exposes the Kubernetes API, which other cluster components, developers, and system administrators use to read and change cluster state.

- Etcd: A distributed, consistent key-value store that holds the cluster's configuration data and state. It acts as the source of truth for Kubernetes.

- Kube-scheduler: Responsible for assigning newly created Pods (groups of one or more containers that Kubernetes schedules as a unit) to nodes based on resource availability, policies, and constraints.

- Kubelet: An agent that runs on every worker node and ensures that the containers in its assigned Pods are running and healthy. It works through the node's container runtime and communicates with the Kubernetes API server.

- Kube-proxy: Runs on each node and maintains the network rules that route traffic to Services, enabling communication between different services in the cluster.
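To make the scheduler's role more concrete, here is a toy sketch of the two broad steps it performs: filtering out nodes that cannot fit a workload, then scoring the remaining ones. The `Node` and `PodRequest` classes, the `pick_node` function, and the "most CPU left over" scoring rule are assumptions made for this illustration; the real kube-scheduler applies many more filters and scoring rules.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


@dataclass
class PodRequest:
    name: str
    cpu_millicores: int
    memory_mib: int


def pick_node(pod: PodRequest, nodes: list[Node]) -> Optional[Node]:
    """Choose a node for the pod, or return None if nothing fits."""
    # Filter step: keep only nodes with enough free CPU and memory.
    feasible = [
        n for n in nodes
        if n.free_cpu_millicores >= pod.cpu_millicores
        and n.free_memory_mib >= pod.memory_mib
    ]
    if not feasible:
        return None  # in a real cluster the Pod would stay pending

    # Score step (toy rule): prefer the node with the most CPU left over.
    best = max(feasible, key=lambda n: n.free_cpu_millicores - pod.cpu_millicores)

    # Reserve the resources so later decisions see the updated capacity.
    best.free_cpu_millicores -= pod.cpu_millicores
    best.free_memory_mib -= pod.memory_mib
    return best


nodes = [Node("node-a", 500, 1024), Node("node-b", 2000, 4096)]
chosen = pick_node(PodRequest("api", cpu_millicores=750, memory_mib=512), nodes)
print(chosen.name if chosen else "unschedulable")
```

In this example `node-a` is filtered out because it has less free CPU than the request, so the workload lands on `node-b`.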

Benefits of Kubernetes

These benefits have made Kubernetes the backbone of cloud-native applications across many industries. Here are the key ways in which Kubernetes solves real-world problems.

- Improved Developer Productivity: Kubernetes significantly simplifies the development process by abstracting the complexity of infrastructure management. Developers can focus on writing code, while Kubernetes handles deployment, scaling, and monitoring.

- Cost Optimization: By scaling workloads automatically, Kubernetes helps ensure that resources are consumed only when they are needed. For instance, during periods of low traffic, it can reduce the number of running replicas, cutting unnecessary spending.

- Multi-cloud and Hybrid Cloud Flexibility: One of the standout features of Kubernetes is its ability to run across multiple cloud platforms (AWS, Azure, GCP) or on-premises data centers. This gives organizations the flexibility to avoid vendor lock-in, leveraging the best features from different cloud providers.

- Disaster Recovery and High Availability: Kubernetes' self-healing and replication capabilities make it an excellent tool for maintaining high availability. Organizations can distribute workloads across multiple zones, or across regions by running multiple clusters, so that an outage in one area does not lead to service disruptions.
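The automatic scaling mentioned under cost optimization can be illustrated with the proportional rule documented for the Horizontal Pod Autoscaler: the desired replica count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up. The sketch below implements only that rule; the function name and the `min_replicas` and `max_replicas` defaults are assumptions for illustration, and the real autoscaler also applies tolerances and stabilization windows that are omitted here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,     # assumed lower bound for this sketch
    max_replicas: int = 10,    # assumed upper bound for this sketch
) -> int:
    """Proportional scaling rule, clamped to the configured bounds."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Average CPU at 90% against a 60% target: scale up from 4 replicas.
print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
# Average CPU at 15% against a 60% target: scale down from 4 replicas.
print(desired_replicas(4, current_metric=15, target_metric=60))  # 1
```

The second call shows the cost side of the argument: when load drops, the same rule shrinks the replica count instead of leaving idle capacity running.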

Challenges and Considerations of Kubernetes

While Kubernetes offers significant benefits, it presents several challenges that organizations need to overcome to ensure a smooth, secure, and scalable operation.

- Complexity: Kubernetes is a powerful but complex platform. Its multi-layered architecture, including Pods, Services, and the control plane, comes with a steep learning curve and requires expertise to manage effectively.

- Networking: While Kubernetes abstracts networking, configuring network policies and services and integrating with external networks can be challenging, and mistakes often lead to performance or security issues.

- Observability: Monitoring distributed microservices across nodes requires advanced observability tools like Prometheus and Grafana. Without these, diagnosing performance issues in a dynamic environment becomes difficult.

- Cluster Stability: Ensuring the stability of the control plane and resources is critical. Mismanagement or capacity issues can cause downtime, despite Kubernetes’ self-healing capabilities.

- Security: Misconfigurations, such as open APIs or improper RBAC, pose security risks. Regular patching and secure access management are crucial to maintaining a safe cluster environment.

- Logging: Pods are ephemeral, and their logs can disappear with them. Centralized logging with external log aggregation tools such as the ELK stack or Fluentd is needed to preserve traceability and troubleshoot issues effectively.

- Storage: Managing persistent storage at scale requires careful coordination with storage backends. Misconfigurations can lead to data loss or performance issues, particularly in stateful applications.

By addressing these key areas, organizations can better manage the inherent challenges of running Kubernetes in production environments.
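As one small example of catching a misconfiguration early, the sketch below scans a Role definition, represented as a Python dictionary, for wildcard (`*`) entries, which grant broader access than is usually intended. The `find_wildcard_rules` helper and the example role are hypothetical; only the `rules`, `apiGroups`, `resources`, and `verbs` field names follow the Kubernetes RBAC schema. A real audit would cover far more than this single check.

```python
def find_wildcard_rules(role: dict) -> list[str]:
    """Return a description of every wildcard found in the role's rules."""
    findings = []
    for index, rule in enumerate(role.get("rules", [])):
        for key in ("apiGroups", "resources", "verbs"):
            if "*" in rule.get(key, []):
                findings.append(f"rule {index}: wildcard in {key}")
    return findings


# Hypothetical role used only for this illustration.
role = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "Role",
    "metadata": {"name": "example-role", "namespace": "default"},
    "rules": [
        # Narrow rule: read-only access to Pods.
        {"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "list"]},
        # Overly broad rule: every verb on every resource.
        {"apiGroups": ["*"], "resources": ["*"], "verbs": ["*"]},
    ],
}

for finding in find_wildcard_rules(role):
    print(finding)
```

A check like this could run in a review or CI step before a manifest is applied, so that overly broad permissions are flagged before they reach the cluster.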

Conclusion

Kubernetes has redefined how modern applications are deployed and managed, offering a powerful platform for building scalable, resilient, and cloud-agnostic systems. For businesses looking to scale effectively in a multi-cloud world, Kubernetes is not just a tool; it is an essential part of their technology stack.

At Sky Solution, we offer comprehensive DevOps services that include Kubernetes for seamless container orchestration. Our solutions are designed to help businesses streamline their application deployment, optimize resource management, and ensure smooth scalability. Ready to enhance your infrastructure with expert DevOps support? Contact Sky Solution today via 0947.369.997 and take your operations to the next level!